07-17-2017 08:37 PM - edited 03-01-2019 05:17 AM

Hi All,

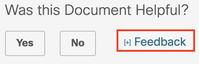

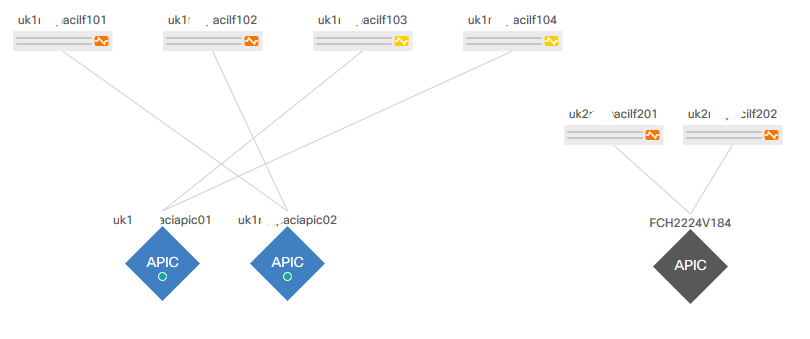

After commissioning the second pod in a Multipod implementation, all leaf and spine nodes in the second pod have been discovered and commissioned. However, the APICs in the second pod are not reachable after completing the initial configuration.

Both APICs in the second pod (there are also two in the seed pod) were configured using the same values as the APICs in the seed pod. However, since the initial configuration dialogue I have not been able to log back in to them using either the admin password or the rescue-user. The leaf node interfaces to which they are attached show a status of "(out-of-service)".

Will I need to reset the admin password using the USB password reset method, or is there an alternate method by which to clear the configuration of an APIC that is otherwise inaccessible?

Cheers,

-Luke


Labels: Cisco ACI

Accepted Solutions


07-18-2017 12:44 AM

Luke,

How did you configure the initial setup of the APICs in pod 2?

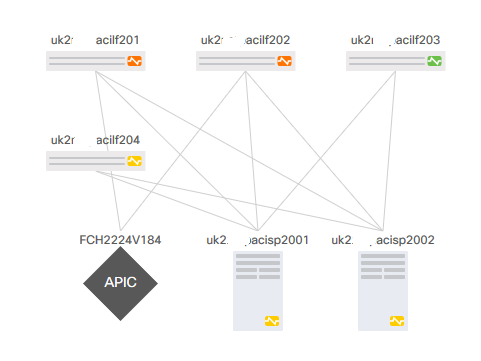

APICs in pod 2 must use the same fabric ID as pod 1, but the pod ID should be 2 (or whichever pod they are in). In the initial setup for a pod 2 APIC, you must enter the pod 1 TEP pool.

If you have 2 APICs in pod 1, then the first APIC in pod 2 should be APIC 3. It sounds like you have a fourth APIC; this will not be an active member of the cluster. It can only be used as a standby APIC, assuming the target size is 3 and not 5. A target size of 4 is not supported.
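The rules above can be written down as a small checklist. The following is only a sketch in Python: the `ApicSetup` field names, the helper functions, and the example TEP pools are hypothetical and are not part of any APIC tool or API. It also simplifies the standby case by treating any APIC numbered above the target size as a standby.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ApicSetup:
    """Values entered in the APIC initial setup dialogue (hypothetical field names)."""
    fabric_id: int
    pod_id: int
    controller_id: int
    tep_pool: str


def check_remote_pod_apic(seed: ApicSetup, remote: ApicSetup, remote_pod: int) -> list[str]:
    """Return the problems found in the setup values of an APIC in a remote pod."""
    problems = []
    if remote.fabric_id != seed.fabric_id:
        problems.append("fabric ID must match pod 1")
    if remote.pod_id != remote_pod:
        problems.append(f"pod ID should be {remote_pod}")
    if remote.tep_pool != seed.tep_pool:
        problems.append("TEP pool must be the pod 1 (seed pod) TEP pool")
    return problems


def cluster_role(apic_number: int, target_size: int) -> str:
    """Simplified model: APICs numbered above the target size act as standby."""
    if target_size == 4:
        raise ValueError("a target cluster size of 4 is not supported")
    return "active" if apic_number <= target_size else "standby"


if __name__ == "__main__":
    # Example values are assumptions, not taken from the thread.
    seed = ApicSetup(fabric_id=1, pod_id=1, controller_id=1, tep_pool="10.0.0.0/16")
    apic3 = ApicSetup(fabric_id=1, pod_id=2, controller_id=3, tep_pool="10.1.0.0/16")
    print(check_remote_pod_apic(seed, apic3, remote_pod=2))
    print(cluster_role(4, target_size=3))
```

An empty list from `check_remote_pod_apic` means the three values discussed here are consistent; it says nothing about cabling, LLDP, or the infra VLAN.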

You will not be able to log in to the APIC as the admin user because it has not synced with APIC 1. If the APIC has not been discovered, then you can use 'rescue-user' as the username.

If all of the configuration mentioned above checks out, then I would recommend looking into LLDP between the APICs and leaf nodes of pod 2.

leaf - show lldp neighbor

APIC - show lldptool [in/out] eth2-1 (you may need to enter the bash shell using the 'bash' command in order to use the lldptool command)

Jason


07-18-2017 04:10 AM

Hi Jason,

Thanks. I had checked the LLDP neighbor status earlier and could see the correct device names and remote port IDs. After executing show lldp neighbor detail just now, however, I can see from the management address reported in the output that I mistakenly specified the TEP pool range of the second pod rather than the first pod.
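For anyone making the same check, the comparison amounts to asking which pod's TEP pool contains the management address that the APIC advertises over LLDP. A minimal sketch using Python's standard `ipaddress` module follows; the pool values and the sample address are made-up examples, not the ones from this fabric.

```python
import ipaddress
from typing import Dict, Optional


def pod_for_address(address: str, tep_pools: Dict[int, str]) -> Optional[int]:
    """Return the pod ID whose TEP pool contains the address, or None if no pool matches."""
    ip = ipaddress.ip_address(address)
    for pod_id, pool in tep_pools.items():
        if ip in ipaddress.ip_network(pool):
            return pod_id
    return None


if __name__ == "__main__":
    # Hypothetical TEP pools for pod 1 and pod 2.
    pools = {1: "10.0.0.0/16", 2: "10.1.0.0/16"}
    # Hypothetical management address read from the LLDP neighbor detail output.
    reported = "10.1.0.3"
    pod = pod_for_address(reported, pools)
    if pod != 1:
        print(f"{reported} is not in the pod 1 TEP pool (matched pod: {pod})")
```

If the address falls in any pool other than the pod 1 pool, the APIC was most likely set up with the wrong TEP pool, which is what happened here.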

I'm guessing that the APICs in the second pod will never be discovered correctly, so is there any way to factory reset them and perform the initial configuration again?

Cheers,

-Luke


07-18-2017 11:15 AM

Luke,

Access the problem APICs via the KVM console and log in with rescue-user. The rescue-user password should be the last admin password set, or none if one was never set. If that does not work, you can mount the APIC ISO image via the KVM and perform a fresh install of the same image. The APIC should then boot up and you can start the setup utility again.

T.


07-18-2017 06:08 PM

After some further attempts this morning, I was finally able to log in to both misconfigured APICs in the second cluster using the rescue-user and the password 'password' (note that I have never used the password 'password' in setting up any component of either fabric).

I was then able to clear the configuration, reboot, and complete the setup using the correct TEP pool.

Thanks for all your help, guys.

-Luke


09-06-2017 02:58 AM

Hi Tomas,

I have a doubt about the initial setup: on APIC 3 in pod 2, does the infra VLAN have the same ID as on the APICs in pod 1?


10-17-2017 04:06 AM

POD ID : ?

Controller ID : ?

Controller name : ?


10-25-2017 01:05 AM

Hi Jason,

How come, in the Cisco documentation for Multipod, a pod = a fabric? Does that mean multiple fabrics with multiple fabric IDs? This is confusing, as seen below:

| Multipod | You can use partial fault isolation with one control plane but isolated data planes across pods. A multipod solution allows a single APIC cluster to manage multiple Cisco ACI fabrics in which each fabric is a pod. The multipod fabric can be between different floors or buildings within a campus or a local metropolitan region. Each pod is a localized fault domain. |


10-25-2017 11:43 AM

Hi TFashy, welcome to the forum.

First, a tip. If you have a new question, ask a new question. Don't try and resurrect an old thread. This item came to my inbox titled Re: Luke - which on a normal day would not entice me to even read it!

But to your question:

How come on Cisco documentation for Multipod, a POD = a Fabric?

The documentation is indeed confusing and unclear. It should say:

A multipod solution allows a single APIC cluster to manage multiple Cisco ACI ~~fabrics~~ pods in which each ~~fabric~~ set of interconnected leaves and spines is a pod. The multipod fabric can be between different floors or buildings within a campus or a local metropolitan region. Each pod is a localized fault domain.

Cisco write thousands of pages of documentation and most of it is excellent quality, but even better still, you'll see that near the top of almost any Cisco document, in the right hand margin is a Feedback option.

I've found that the Cisco documentation team are very responsive to acting on feedback, so when you find something like this, you can always let them know. In fact, I've already done so for this document in response to your question.

I hope this helps

Don't forget to mark answers as correct if it solves your problem. This helps others find the correct answer if they search for the same problem

Forum Tips: 1. Paste images inline - don't attach. 2. Always mark helpful and correct answers, it helps others find what they need.


09-13-2018 04:39 AM

I have followed the details in the previous posts, but no joy. I can see DC1(POD1) APICs & DC2(POD2) standby APIC, but not the 3rd APIC in DC2.

I have rebuilt both APICs in DC2 to see if that was the issue, same results, no matter what I do, I just see the standby APIC.

Any ideas?
